World Models & Interactive Video

This project showcases an interactive 2D particle system built in TouchDesigner.

When the user clicks on the canvas, particles spray outward, and their movement is driven by directional noise.
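The click-to-spray behavior can be sketched outside TouchDesigner in plain Python. This is a minimal, hypothetical illustration, not the project's actual operator network. It assumes that each click emits a fixed batch of particles with random outward velocities. It also swaps in a cheap sinusoidal flow field (`noise_angle`, a made-up helper) for real Perlin or simplex noise. All names and parameters (`spray`, `step`, `noise_strength`, `drag`, `max_age`) are illustrative.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    age: int = 0


def noise_angle(x: float, y: float, t: float, scale: float = 0.01) -> float:
    """Hypothetical smooth flow field: returns a steering angle in radians.

    A cheap stand-in for a proper noise function such as Perlin or simplex noise.
    """
    return (math.sin(x * scale + t) + math.cos(y * scale * 1.3 - t * 0.7)) * math.pi


def spray(cx: float, cy: float, count: int = 50, speed: float = 4.0, rng=None):
    """Emit `count` particles from a click at (cx, cy), each moving outward at a random angle."""
    rng = rng if rng is not None else random.Random()
    particles = []
    for _ in range(count):
        angle = rng.uniform(0.0, 2.0 * math.pi)
        s = speed * rng.uniform(0.5, 1.0)  # speed between 50% and 100% of `speed`
        particles.append(Particle(cx, cy, math.cos(angle) * s, math.sin(angle) * s))
    return particles


def step(particles, t: float, noise_strength: float = 0.3, drag: float = 0.96, max_age: int = 120):
    """Advance one frame: nudge velocity along the noise field, apply drag, drop old particles."""
    alive = []
    for p in particles:
        a = noise_angle(p.x, p.y, t)
        p.vx = (p.vx + math.cos(a) * noise_strength) * drag
        p.vy = (p.vy + math.sin(a) * noise_strength) * drag
        p.x += p.vx
        p.y += p.vy
        p.age += 1
        if p.age < max_age:
            alive.append(p)
    return alive


# Usage: one click at the center of a 640x480 canvas, simulated for 60 frames.
particles = spray(320.0, 240.0, count=100, rng=random.Random(0))
for frame in range(60):
    particles = step(particles, t=frame / 60)
print(len(particles))  # 100, since no particle has reached max_age yet
```

The noise force is blended into the existing velocity rather than replacing it. This keeps the initial outward burst visible for a few frames before the flow field takes over, and `drag` controls how quickly that hand-off happens.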

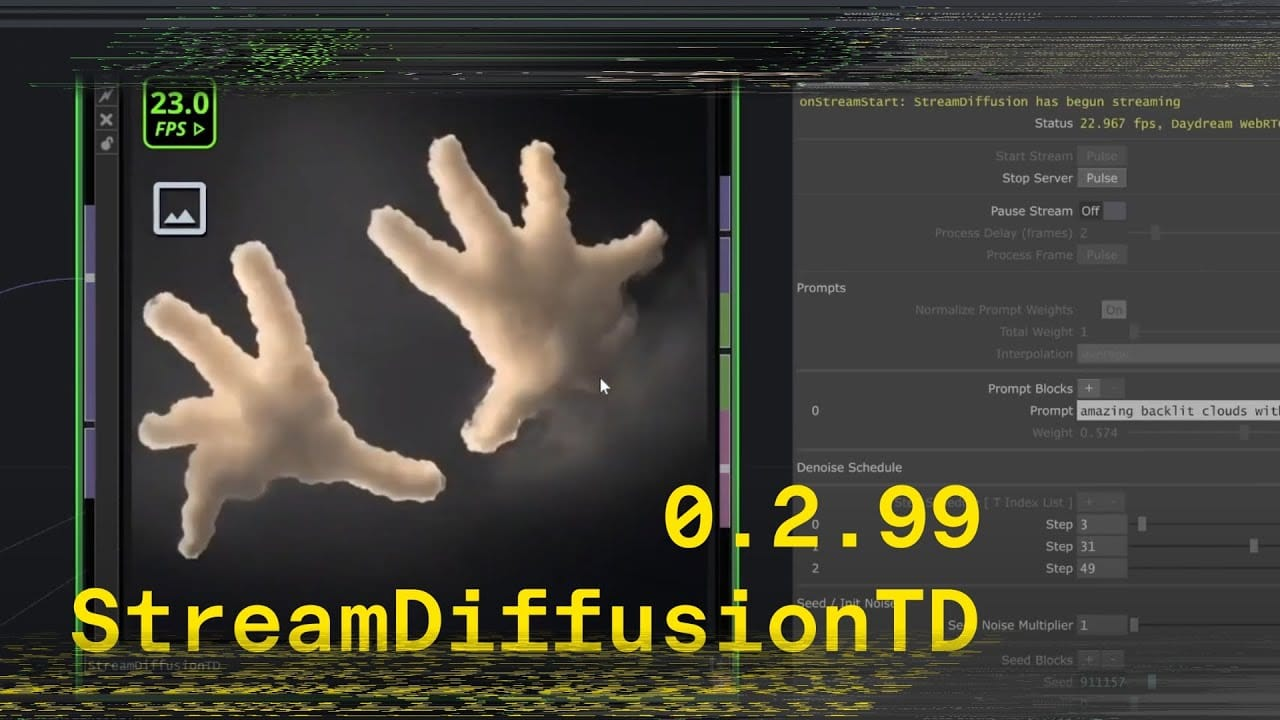

The rendered output is then processed through StreamDiffusionTD, transforming the particle motion into rich, evolving AI-generated visuals.

The interaction layer can be switched from mouse control to alternative input methods such as MediaPipe, Leap Motion, or Kinect, enabling real-time interactive installations.
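One way to keep the interaction layer swappable is to put every input behind a single polling interface. The particle emitter then never needs to know whether a point came from the mouse or from a tracker. The sketch below is hypothetical: `InputSource`, `MouseInput`, `TrackedPointInput`, and `update` are illustrative names. It assumes the tracker reports positions normalized to [0, 1], as MediaPipe landmarks do, and that the canvas uses the same top-left origin. Flip `y` if your canvas origin is at the bottom.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Protocol, Tuple

Point = Tuple[float, float]


class InputSource(Protocol):
    def poll(self) -> Optional[Point]:
        """Return a canvas-space emission point for this frame, or None."""
        ...


@dataclass
class MouseInput:
    """Emits at the cursor position while the button is held."""
    x: float = 0.0
    y: float = 0.0
    pressed: bool = False

    def poll(self) -> Optional[Point]:
        return (self.x, self.y) if self.pressed else None


@dataclass
class TrackedPointInput:
    """Hypothetical adapter for a tracker that reports normalized [0, 1] positions."""
    width: int
    height: int
    nx: Optional[float] = None
    ny: Optional[float] = None

    def poll(self) -> Optional[Point]:
        if self.nx is None or self.ny is None:
            return None  # nothing tracked this frame
        # Clamp to [0, 1], then scale to canvas pixels.
        nx = min(max(self.nx, 0.0), 1.0)
        ny = min(max(self.ny, 0.0), 1.0)
        return (nx * self.width, ny * self.height)


def update(source: InputSource, emit: Callable[[float, float], None]) -> bool:
    """Poll one source; if it produced a point, forward it to the emitter."""
    point = source.poll()
    if point is None:
        return False
    emit(*point)
    return True


# Usage: both sources feed the same (here, recording) emitter.
hits = []
mouse = MouseInput(120.0, 80.0, pressed=True)
hand = TrackedPointInput(width=1280, height=720, nx=0.5, ny=0.25)
for src in (mouse, hand):
    update(src, lambda x, y: hits.append((x, y)))
print(hits)  # [(120.0, 80.0), (640.0, 180.0)]
```

Switching from mouse to Kinect or Leap Motion then only means writing another small adapter with a `poll` method. The emitter and the downstream rendering stay unchanged.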

StreamDiffusionTD works especially well in this setup because the abstract input lets the model adapt fluidly to many different visual prompts and interpretations.

The result is fluid, abstract visuals that bridge human interaction and AI imagination, where every click becomes a burst of motion and meaning.
